Welcome!

Learn more about intelligent agents and start building intelligent applications with the Brahms Agent Language, originally developed at NASA. The Brahms Agent Language is a general-purpose artificial intelligence language for developing intelligent distributed systems.

What are intelligent agents?

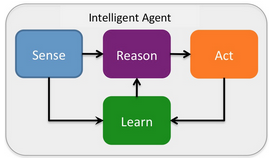

An intelligent agent is a computer program or system that is situated in an environment (i.e., it is able to detect or receive facts about the environment) and that is capable of autonomous action in order to meet its delegated objectives (i.e., goals and actions). Intelligent agents are autonomous in that they can sense, reason, learn and act independently.

For more details, see An Introduction to MultiAgent Systems, 2nd ed., Michael Wooldridge, 2009, and Artificial Intelligence: A Modern Approach, 3rd ed., Stuart Russell and Peter Norvig, 2009.

What is agent-oriented programming?

Agent-oriented programming (AOP) is a programming paradigm in which software development is centered on the concept of "agents". Object-oriented programming (OOP), by comparison, is a programming paradigm based on the concept of "objects", which are data structures that contain data, in the form of fields (often known as attributes), and code, in the form of procedures or functions (often known as methods). A distinguishing feature of objects is that an object's methods can access and often modify the attributes of the object with which they are associated (objects have a notion of "this").

In contrast to object-oriented programming, which has objects at its core, AOP has agents at its core. Agents can be thought of as abstractions of objects with special capabilities. Intelligent Agents are agents that are:

- Autonomous: An agent acts independently; an agent's action choices depend on its own experience (through sensing and learning), rather than on knowledge of the environment that has been built in by the developer. Code is not executed by calling methods; instead, it runs continuously in its own thread, and the agent decides when it should perform some action.

- Proactive: Agents have capabilities for action selection, prioritization, goal-directed behavior, and decision-making, all without intervention from a higher-level agent or object.

- Reactive: Agents can perceive the context (e.g., their environment) in which they operate and react to it autonomously, as appropriate.

- Social: Agents are able to engage other agents, systems or people through communication and coordination, and they may collaborate on a task.

With these capabilities, Intelligent Agents can represent the autonomous, social and intelligent behavior of people, systems and organizations.
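The contrast between calling an object's method and an agent that runs on its own can be sketched in Python. This is not Brahms code: `ThermostatAgent`, its percept queue, and the 20-degree threshold are hypothetical, chosen only to show an agent that runs continuously in its own thread, turns what it senses into beliefs (reactive), and decides by itself when to act (autonomous).

```python
import queue
import threading


class ThermostatAgent(threading.Thread):
    """Hypothetical agent: runs in its own thread, turns percepts into
    beliefs, and decides by itself when to act."""

    def __init__(self, threshold=20.0):
        super().__init__(daemon=True)
        self.threshold = threshold
        self.percepts = queue.Queue()      # facts the environment reports
        self.beliefs = {"temperature": None, "heating": False}
        self.actions = []                  # actions the agent chose to take
        self._stop_event = threading.Event()

    def run(self):
        # Continuous sense-deliberate-act loop; no caller invokes an update method.
        while not self._stop_event.is_set():
            try:
                name, value = self.percepts.get(timeout=0.05)   # sense
            except queue.Empty:
                continue
            try:
                self.beliefs[name] = value                      # update beliefs
                self.deliberate()                               # decide and act
            finally:
                self.percepts.task_done()

    def deliberate(self):
        temperature = self.beliefs["temperature"]
        if temperature is None:
            return
        want_heat = temperature < self.threshold
        # Act only when the desired state differs from what the agent believes.
        if want_heat != self.beliefs["heating"]:
            self.beliefs["heating"] = want_heat
            self.actions.append("heater_on" if want_heat else "heater_off")

    def stop(self):
        self._stop_event.set()


agent = ThermostatAgent()
agent.start()
for reading in (22.0, 18.5, 19.0, 21.5):
    agent.percepts.put(("temperature", reading))
agent.percepts.join()   # block until every percept has been processed
agent.stop()
agent.join()
print(agent.actions)    # ['heater_on', 'heater_off']
```

An object-oriented thermostat would instead expose a method that some external caller must invoke at the right moment; here the caller only reports facts, and the choice of action stays with the agent.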

What is Brahms?

Brahms is an Agent-Oriented Language for developing Intelligent Agents. Brahms falls into the categories of Belief-Desire-Intention (BDI) architectures and cognitive architectures.

Agents are first-class citizens in the Brahms language, which means that they can be passed as parameters and assigned to variables; agents can also send messages to and receive messages from other agents. Moreover, Brahms agents are belief-based, which allows you to encapsulate intelligent behavior that depends on each agent's beliefs. You can model an agent organization using group-membership inheritance. You can create many agents, each with its own encapsulated behavior, just as you can create many objects in an object-oriented language.
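Group-membership inheritance and belief-based behavior can be approximated in Python as follows. This is an analogy, not Brahms syntax: `Group`, `Agent`, `Workframe` (loosely named after Brahms workframes), `lineage`, and the EVA-flavored beliefs are hypothetical, and the rule matching (exact equality on belief values) is a deliberate simplification. An agent collects beliefs and rules from all of its groups, its own beliefs override inherited ones, and which rules apply depends only on what the agent currently believes.

```python
from dataclasses import dataclass, field


@dataclass
class Workframe:
    """Hypothetical condition-action rule: `action` applies when every
    precondition matches the agent's current beliefs."""
    name: str
    preconditions: dict
    action: str


@dataclass
class Group:
    name: str
    member_of: list = field(default_factory=list)       # parent groups
    initial_beliefs: dict = field(default_factory=dict)
    workframes: list = field(default_factory=list)


def lineage(groups):
    """Every group reachable through member_of, ancestors listed before descendants."""
    order, seen = [], set()

    def visit(group):
        if group.name in seen:
            return
        seen.add(group.name)
        for parent in group.member_of:
            visit(parent)
        order.append(group)

    for group in groups:
        visit(group)
    return order


@dataclass
class Agent:
    name: str
    member_of: list
    own_beliefs: dict = field(default_factory=dict)

    def __post_init__(self):
        groups = lineage(self.member_of)
        self.beliefs = {}
        for group in groups:                           # general groups first, so
            self.beliefs.update(group.initial_beliefs)  # descendants override them
        self.beliefs.update(self.own_beliefs)          # the agent's own beliefs win
        self.workframes = [wf for group in groups for wf in group.workframes]

    def applicable_actions(self):
        """Actions whose preconditions all hold under the current beliefs."""
        return [wf.action for wf in self.workframes
                if all(self.beliefs.get(k) == v for k, v in wf.preconditions.items())]


person = Group("Person", initial_beliefs={"location": "habitat", "suit_on": False})
astronaut = Group("Astronaut", member_of=[person], workframes=[
    Workframe("start_eva", {"suit_on": True, "location": "airlock"}, "exit_airlock")])
geologist = Group("Geologist", member_of=[person], initial_beliefs={"location": "field"},
                  workframes=[Workframe("sample", {"location": "field"}, "collect_sample")])

alex = Agent("Alex", member_of=[astronaut, geologist], own_beliefs={"suit_on": True})
print(alex.beliefs)                   # {'location': 'field', 'suit_on': True}
print(alex.applicable_actions())      # ['collect_sample']
alex.beliefs["location"] = "airlock"  # the agent now believes it is in the airlock
print(alex.applicable_actions())      # ['exit_airlock']
```

Because behavior is selected from beliefs rather than invoked by a caller, changing one belief is enough to change what the same agent does.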

What is the history of Brahms at NASA?

Brahms applications were developed in the Intelligent Systems Division at NASA Ames Research Center. The main contributors to the development of Brahms were:

- Maarten Sierhuis - Co-founder of Ejenta, previously Senior Scientist at NASA Ames Research Center.

- Bill Clancey - Senior Scientist at the Institute of Human Machine Cognition, previously Chief Scientist for Human-Centered Computing at NASA Ames Research Center.

- Ron van Hoof - Senior Research Associate at the Institute of Human Machine Cognition, previously Senior Software Engineer at NASA Ames Research Center.

- Chin Seah - Senior Software Engineer at Ejenta, previously Senior Software Engineer at NASA Ames Research Center.

- Mike Scott - Senior Software Engineer at NASA Ames Research Center.

What did NASA develop with Brahms?

At NASA, Brahms was used to build a number of systems and applications:

OCA Management System (OCAMS) - International Space Station Mission Control

The Orbital Communications Adaptor Management System (OCAMS) is the first application of intelligent multi-agent system (MAS) technology in NASA's mission control operations. OCAMS has been running 24x7 in NASA's Houston Mission Control Center since July 2008. Since 2012, OCAMS has completely automated the work previously done by the OCA Officer flight controllers, saving NASA's Mission Control more than $2M in operational costs per year. See more details.

OCA Officer flight controllers in NASA's International Space Station Mission Control Center, at NASA Johnson Space Center, previously used different computer systems to uplink, downlink, mirror, archive, and deliver files to and from the International Space Station (ISS) in real time. Today, OCAMS is at Release 4.5 and has completely automated the work of the OCA Officer. OCAMS consists of more than 20 independent software agents running on three different computers and integrates three secure communication networks, including the communication network to and from the ISS. OCAMS was developed using a combination of the Brahms agent-oriented language and the Java object-oriented language.

The Mobile Agents Architecture - Human-Agent-Robot Interaction

From 2001 to 2006, researchers from NASA Ames Research Center developed an advanced multi-agent EVA communications system to increase astronaut self-reliance and safety and to reduce dependence on continuous monitoring and advice from Mission Control. This system, called the Mobile Agents Architecture (MAA), is voice controlled and provides information verbally to the astronauts through "personal agents". The system partially automates the role that CapCom played during Apollo, including monitoring and managing EVA navigation, scheduling, equipment deployment, telemetry, health tracking, and scientific data collection. EVA data are stored automatically in a shared database in the habitat and/or vehicle and mirrored to a site accessible by a remote science team.

In 2004, researchers tested the system at the Mars Desert Research Station (MDRS) in Utah. During this extensive field test, an EVA robotic assistant (ERA) followed geologists approximately 150 m through a winding, narrow canyon. On voice command, the ERA took photographs and panoramas and was directed to move and wait in various locations to serve as a relay on the wireless network.

In 2006, researchers extended the MAA to include software agents that monitor the power systems inside the MDRS. These "Power Agents" monitor the power generation system and power consumption of the MDRS, providing real-time voice notifications and a natural language query capability. See it in action.

In 2006, the MAA was also used during NASA's Desert RATS field test, at NASA's Mission Control in Houston and at Meteor Crater in Arizona. The MAA was used to command a lunar rover remotely from Mission Control, using voice-commanded personal agents for the flight controller in Mission Control as well as for the robots and astronauts at Meteor Crater. See it in action.

The MAA is applicable to situations that require map navigation, interaction with piloted and autonomous vehicles, and remote human-robot team collaboration. The MAA was developed using the Brahms agent-oriented language and the Java object-oriented language.

MRA - Health Monitoring of Astronauts

NASA developed the MRA (Metabolic Rate Advisor) to serve as a personal assistant for astronauts working outside a spacecraft. The MRA system consists of a personal agent for the astronaut, running in his or her space suit. The astronaut interacts with the personal agent through an open-mic spoken dialog interface, and the agent acts as a proxy for the flight surgeon when communication with the flight surgeon is not possible (e.g., while on Mars). The MRA system continuously monitors the astronaut's health and extravehicular activity (EVA) plan, and can advise the astronaut about their health and work tasks by continuously predicting the astronaut's metabolic rate from all available health and space suit sensor data. The complete system was tested with astronauts at Johnson Space Center in the POGO (Partial Gravity Simulator).

How is Brahms being used by Ejenta and others?

Intelligent Agents for Remote Monitoring

Ejenta is developing a cloud-based personal assistant platform, with intelligent agents that are able to understand users' daily activities, monitor adherence to plans, and offer personalized advice and support. The Ejenta platform integrates wearable and environmental sensors, which gather real-time behavioral data, with a team of intelligent agents running in the cloud. Users can interact with their personal agents via mobile devices, spoken natural language, and chat interfaces. Ejenta is working with a number of large commercial and government partners to apply this platform to remote patient health monitoring and to health monitoring of first responders.

Academic and Research Use

Brahms has been used by hundreds of academic and research institutions and students in several countries. See below for other examples of Brahms Applications.

Have other Brahms applications that you'd like us to share? Get in touch with us at brahms@ejenta.com.

What is Ejenta's relationship to Brahms and NASA?

The two organizations entered into a mutual license agreement that allowed NASA to license Brahms from Ejenta and to make NASA Brahms applications available to the outside world.

Get Brahms

How can I get access to the Brahms Agent Environment?

If you are interested in developing intelligent applications with Brahms, visit the Developers page to request a license.